Why Inference Stability

The Failure Mode the AI Stack Cannot See

Most AI failures in production are not training failures.

They are runtime failures: emergent instability during inference under recursion, tool use, and long-horizon interaction.

Systems run longer.

They act autonomously.

They loop, plan, remember, and adapt.

Over time:

behavior drifts

reasoning degrades

identities fragment

and failures emerge that appear sudden

They are not sudden.

They are structural.

SubstrateX exists to address this exact problem.

Inference Is Not Stateless

Inference is commonly treated as a feed-forward step that “just produces tokens.”

That assumption no longer holds.

Under sustained use, AI systems behave like dynamical systems.

They accumulate context.

They recurse on prior states.

They interact with tools and environments.

What emerges is not a series of independent outputs, but motion through time.

And motion has structure.

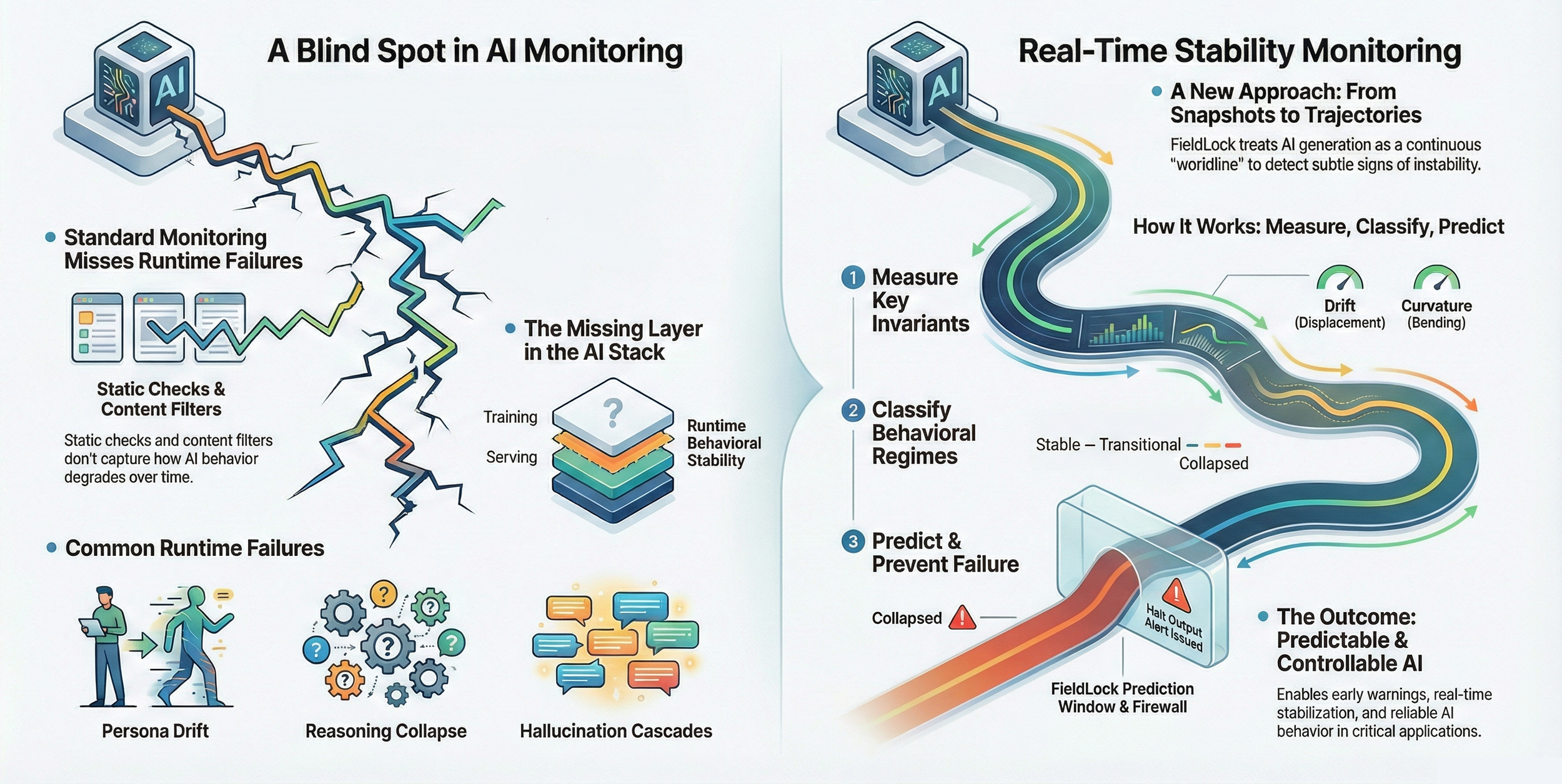

The Blind Spot in Today’s Stack

Today’s observability stack can tell you:

cost

latency

throughput

error rates

It cannot answer the only question that matters in production:

Is this system becoming unstable right now?

There is no early-warning layer for inference-time instability.

Failure is discovered after it happens: by users, auditors, or incident response.

That is the gap SubstrateX exists to close.

From Folklore to Measurement

Over the past year, a focused research program formalized what many teams experience but cannot measure:

Inference-phase instability is predictable.

Drift, rigidity, regime transitions, and collapse do not occur randomly.

They follow consistent, measurable patterns.

Critically:

these patterns are model-agnostic

they generalize across substrates

and they can be detected using output-only telemetry

The same stability signals replicate across transformer inference and substrate-independent dynamical systems.

The core technical uncertainty is resolved.

The dynamics are mapped.

The invariants replicate.

The instruments exist.

The Leverage Point

Once inference behavior becomes measurable as dynamics, a conclusion follows:

Stability becomes an engineering problem, not a matter of hope.

If failure has precursors:

it can be detected early

it can be forecast

and it can be governed

This is the leverage point SubstrateX is built on.
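The article does not describe how FieldLock detects precursors. As a purely illustrative sketch of what "detected early" can mean with output-only telemetry, assume each turn yields one scalar signal computed from the model's output (what that signal is, for example response length or similarity to the previous response, is an assumption here). A generic z-score check, swapped in for illustration and not FieldLock's method, compares a recent window against a frozen baseline. The names `DriftMonitor`, `baseline`, `window`, and `z_threshold` are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class DriftMonitor:
    """Hypothetical output-only drift check (illustrative, not FieldLock's method).

    Freezes the first `baseline` observations as a reference distribution,
    then compares the mean of the most recent `window` observations against it.
    """

    def __init__(self, baseline: int = 20, window: int = 5, z_threshold: float = 3.0):
        self.baseline_size = baseline
        self.baseline = []                  # frozen reference observations
        self.recent = deque(maxlen=window)  # rolling window of latest observations
        self.z_threshold = z_threshold

    def observe(self, value: float) -> bool:
        """Record one per-turn signal; return True if drift is flagged."""
        if len(self.baseline) < self.baseline_size:
            self.baseline.append(value)
            return False
        self.recent.append(value)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait until the window is full
        mu = mean(self.baseline)
        sigma = pstdev(self.baseline) or 1e-9  # avoid division by zero on a flat baseline
        se = sigma / len(self.recent) ** 0.5   # standard error of a window mean
        z = (mean(self.recent) - mu) / se
        return abs(z) > self.z_threshold


# Usage: a stable signal followed by an abrupt shift.
monitor = DriftMonitor(baseline=20, window=5, z_threshold=3.0)
signals = [1.0, 1.1, 0.9, 1.0] * 5 + [3.0] * 5
flags = [monitor.observe(s) for s in signals]
print(flags[-1])  # True
```

Freezing the baseline instead of rolling it is a deliberate choice in this sketch: a rolling baseline would gradually absorb slow drift and stop flagging it.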

What FieldLock Is

FieldLock™ is predictive monitoring for inference stability.

It sits:

above inference stacks (local runtimes and cloud APIs)

below application logic and orchestration

It is:

model-agnostic

output-only

inline and low-latency

deployable without retraining or architectural change

FieldLock turns runtime failure from a surprise into a forecast.

This is not a safety layer added after generation.

It is infrastructure for inference itself.

Why This Matters Now

AI systems are rapidly becoming:

long-running

agentic

tool-using

enterprise-critical

regulator-visible

Runtime instability grows faster than training-time fixes can contain it.

This is how new infrastructure layers always emerge:

metrics existed before observability platforms

logs existed before structured logging

errors existed before reliability standards

Inference stability is next.

Not because it is elegant,

but because it is required.

Execution Posture

SubstrateX is no longer exploratory.

Research risk is retired.

The focus is execution:

hardening FieldLock™ for production

private pilots with real long-horizon workloads

integration with monitoring, governance, and agent platforms

standardizing inference-phase health signals

If you are building or deploying:

agentic systems

enterprise AI platforms

regulated AI workflows

long-horizon copilots

you already know the failure modes.

FieldLock is built for the part of the stack you cannot currently see.