Validation Pilot

Decision-Grade Runtime Stability Evidence

What the Validation Pilot Is

The SubstrateX Validation Pilot is a time-boxed, governed engagement designed to answer one operational question:

Is runtime inference instability present in your system, and can it be detected early enough to matter?

This is not a demo.

This is not exploratory research.

This is not a generic evaluation.

It is read-only, inference-phase instrumentation applied to a real system, under real workload conditions, to produce evidence you can act on.

The Validation Pilot is the required prerequisite for:

ESL reporting

FieldLock infrastructure deployment

any ongoing stability or governance program

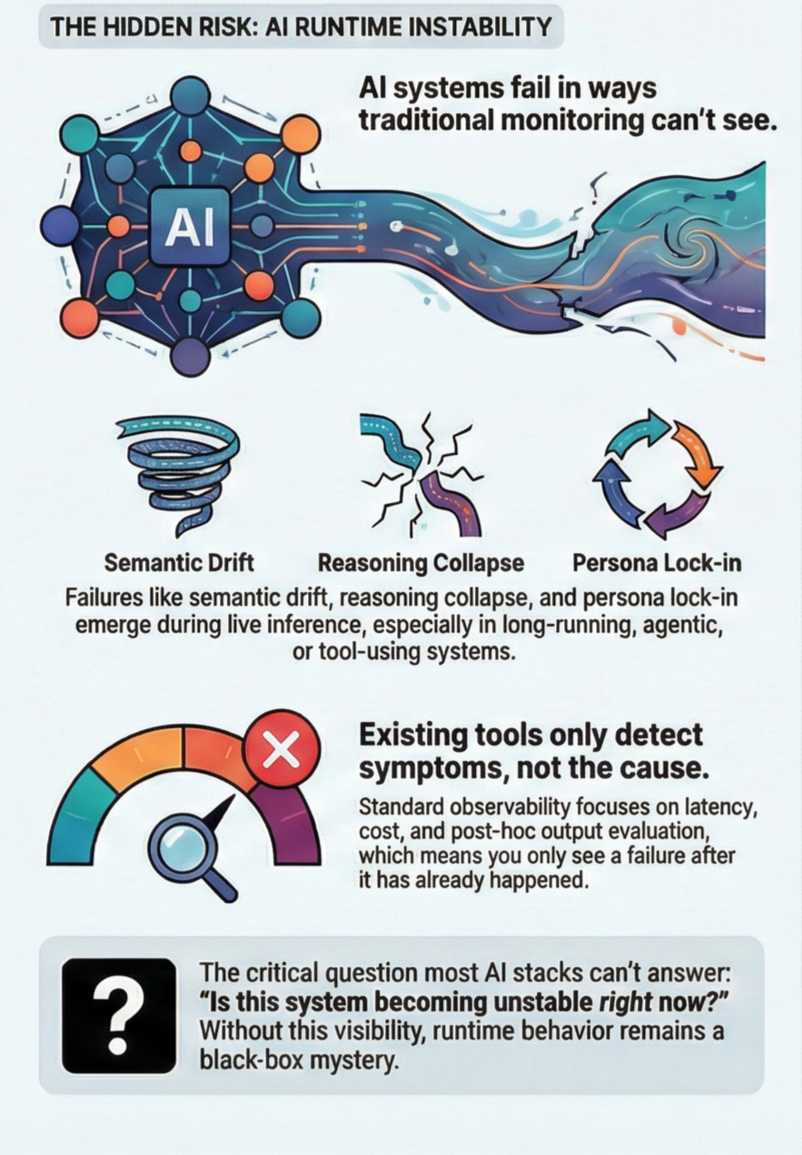

Why the Validation Pilot Exists

Most organizations know AI systems can fail at runtime.

Very few can prove:

whether instability is actually present

when it begins

whether it is observable without internal access

or whether intervention would be possible in time

The Validation Pilot exists to replace assumptions with measurement.

The outcome is not a dashboard.

The outcome is a defensible decision artifact.

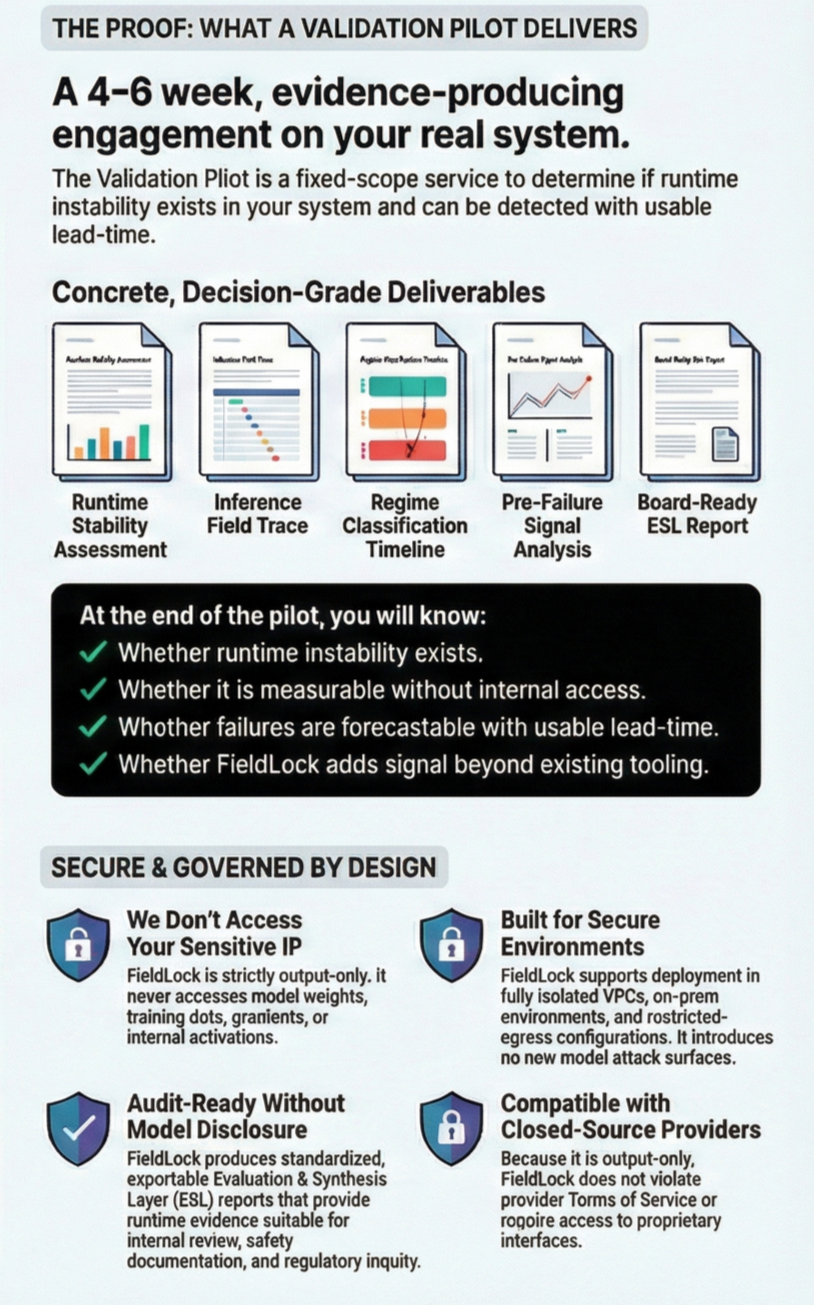

What the Pilot Delivers

Each Validation Pilot produces a fixed, governance-ready output set.

-

A system-level characterization of:

stability under sustained inference

where Stable, Transitional, Phase-Locked, Collapse, and Recovery regimes occur

how behavior shifts with horizon, recursion, and tool use

This establishes whether instability exists, and where.

-

A reconstructed, time-ordered worldline of runtime behavior derived from:

output-only telemetry

controlled probe runs (when appropriate)

No weights.

No training data.

No internal activations.

This trace is the empirical backbone of all downstream analysis.

-

Identification of:

regimes observed

transition triggers

dwell times and recurrence patterns

This timeline compresses directly into standardized ESL form.
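As an illustration only, the dwell-time and recurrence bookkeeping described above can be sketched as follows. The sketch assumes the reconstructed worldline is available as a time-ordered list of `(timestamp_seconds, regime_label)` samples whose labels use the five regimes named earlier. The function name `summarize_regimes` and the input format are hypothetical, not a SubstrateX interface, and the sketch does not perform regime classification itself.

```python
from collections import Counter, defaultdict


def summarize_regimes(trace):
    """Collapse a sampled regime trace into episodes, dwell times, and transitions.

    Hypothetical input: a time-ordered list of (timestamp_seconds, regime_label).
    """
    if not trace:
        return {"episodes": [], "dwell": {}, "transitions": Counter(), "recurrences": {}}

    episodes = []  # (regime, start_time, end_time)
    current, start = trace[0][1], trace[0][0]
    for t, regime in trace[1:]:
        if regime != current:
            # An episode ends where the next regime's first sample begins.
            episodes.append((current, start, t))
            current, start = regime, t
    # The final episode is closed at the last sample, so its dwell time
    # is a lower bound (the trace may end mid-episode).
    episodes.append((current, start, trace[-1][0]))

    dwell = defaultdict(list)  # regime -> list of episode durations
    for regime, s, e in episodes:
        dwell[regime].append(e - s)

    # Count each regime-to-regime transition between consecutive episodes.
    transitions = Counter((a[0], b[0]) for a, b in zip(episodes, episodes[1:]))

    # Recurrence: how many separate episodes each regime appears in.
    recurrences = {r: len(d) for r, d in dwell.items()}
    return {"episodes": episodes, "dwell": dict(dwell),
            "transitions": transitions, "recurrences": recurrences}
```

For a trace such as `[(0, "Stable"), (10, "Stable"), (20, "Transitional"), (30, "Collapse"), (40, "Recovery"), (50, "Stable"), (60, "Stable")]`, this yields five episodes, two recurrences of `Stable` with dwell times `[20, 10]`, and one transition each for Stable to Transitional, Transitional to Collapse, Collapse to Recovery, and Recovery to Stable.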

-

Analysis of deformation patterns that reliably appear before:

hallucination spikes

agent runaway

reasoning collapse

catastrophic mode-lock

This establishes usable lead time before failure, rather than post-mortem explanation after it.
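One simple way to make "usable lead time" concrete is sketched below. It assumes an early-warning detector emits a timestamp whenever a deformation pattern is flagged, and that failure events (for example, a hallucination spike) are logged separately. For each failure, the lead time is the gap between the earliest warning inside a fixed lookback window and the failure itself; failures with no warning in the window count as unanticipated. The function `lead_times` and the `max_lookback` parameter are hypothetical illustrations, not the pilot's actual scoring method. The window keeps an unrelated warning that fired long before from being credited.

```python
from bisect import bisect_left


def lead_times(warnings, failures, max_lookback):
    """Return (failure_time, lead_time or None) for each failure.

    Lead time is measured from the earliest warning within max_lookback
    seconds before the failure; None means no warning fired in that window.
    """
    warnings = sorted(warnings)
    results = []
    for f in sorted(failures):
        # Index of the first warning at or after the start of the lookback window.
        i = bisect_left(warnings, f - max_lookback)
        if i < len(warnings) and warnings[i] < f:
            results.append((f, f - warnings[i]))
        else:
            results.append((f, None))  # failure was not anticipated
    return results
```

With warnings at `[5, 40, 95]`, failures at `[50, 100, 200]`, and `max_lookback=30`, the result is `[(50, 10), (100, 5), (200, None)]`: the first two failures were preceded by a warning inside the window, and the third was missed.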

-

A governed ESL bundle that:

summarizes regime distribution and stability posture

presents risk and readiness tiers

is structured for engineering, risk, and regulatory audiences

is comparable across future runs and systems

This report is exportable, auditable, and board-ready.

Scope & Constraints

To preserve signal quality and interpretability, each pilot is intentionally narrow:

one system (model or model stack)

one workload class (agent, chat, planning, tool use, etc.)

one deployment context (staging or defined production slice)

This keeps operational risk low and conclusions defensible.

What the Validation Pilot Does Not Do

To be explicit, the Validation Pilot does not involve:

access to model weights

access to training data

inspection of internal activations

modification of inference behavior

alignment tuning or policy control

The pilot runs in strict observe-only mode.

Measurement precedes intervention.

First we prove instability is visible. Only then do we discuss control.

Duration & Structure

Typical duration: 4–6 calendar weeks

Phase 1: Scoping & Alignment

System selection, workload definition, governance constraints

Phase 2: Live Observation

Read-only instrumentation alongside normal operation or replay

Phase 3: Analysis & Classification

Regime extraction, signal validation, normalization

Phase 4: Reporting & Review

Delivery of Runtime Stability Assessment and ESL bundle

Joint review with engineering, risk, and governance stakeholders

Who the Validation Pilot Is For

The Validation Pilot is appropriate if you:

operate long-running, agentic, or tool-using AI systems

rely on AI for business-critical or safety-relevant decisions

face governance or regulatory scrutiny

are no longer satisfied with “we tested it once and it looked fine”

Typical initiators include:

AI / ML platform teams

SRE and reliability engineering

safety, risk, and compliance groups

applied research and advanced development labs

What You Know at the End

By the end of the Validation Pilot, you will know:

whether runtime instability exists

whether it is measurable without internal access

whether failures are forecastable with usable lead-time

whether FieldLock adds signal beyond existing tooling

whether broader deployment is warranted

Regardless of outcome, you leave with a defensible runtime stability narrative grounded in measurement.

Call to Action

➡️ Request a Validation Pilot

➡️ Speak with the Founding Team

Platform Note

The Validation Pilot is the bridge between:

the science of inference-phase behavior, and

the infrastructure required to operate AI systems with measurable stability

It is the first step in moving from assumed reliability to engineered stability.