SubstrateX

Runtime Stability Infrastructure for AI Systems

Most AI failures begin hours before the first bad output appears.

We detect inference instability before it becomes visible failure - without accessing model internals.

SubstrateX defines and builds a new category of AI infrastructure: runtime cognitive stability.

We provide stability firewalls, predictive monitoring, and temporal forensics for inference-phase behavior in long-running, agentic AI systems, without requiring model access.

Most production AI monitoring watches symptoms.

SubstrateX measures the dynamics beneath them.

Read-Only. Output-Only. Governed Deployment.

The Inference Failure Problem

Modern AI systems rarely fail at training time.

They fail later - during inference - as systems:

run continuously

recurse on prior states

use tools

maintain memory

and act autonomously across long horizons

Failures do not appear as isolated bad outputs.

They emerge as structural instability unfolding over time.

This runtime layer has always existed.

What has changed is scale, autonomy, and consequence.

Until now, inference-time behavior has been effectively unmeasured.

SubstrateX closes that gap.

We make runtime AI behavior legible, measurable, and governable - turning stability from an aspiration into an engineering discipline.

What We Provide

Runtime Stability

SubstrateX builds model-agnostic stability firewalls and monitoring infrastructure for organizations deploying AI in regulated, mission-critical, and safety-sensitive environments.

Our platform continuously monitors inference-phase behavior to detect instability before it becomes visible failure, including:

behavioral drift across long-horizon runs

stability and deformation of reasoning trajectories

early anomaly signals during live inference

deviation from expected behavioral baselines

All monitoring is output-only and requires:

no access to model weights

no retraining or fine-tuning

no architectural or serving-stack changes

This allows runtime stability to be measured consistently across open, closed, and third-party models.
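To make "deviation from expected behavioral baselines" concrete using outputs alone, the sketch below scores one scalar per inference step against a baseline window with a plain z-score. The telemetry signal (here, the word count of each response), the function name `baseline_deviation`, and the threshold of 3 are all hypothetical choices for illustration, not SubstrateX's method.

```python
from statistics import mean, stdev


def baseline_deviation(values, baseline_len=20, threshold=3.0):
    """Flag steps whose telemetry value sits more than `threshold` baseline
    standard deviations from the baseline mean (a plain z-score test).

    `values` holds one scalar per inference step, derived from outputs only
    (hypothetical example: word count of each response).
    Returns the indices of flagged steps after the baseline window."""
    if baseline_len < 2 or len(values) < baseline_len:
        raise ValueError("need at least baseline_len >= 2 observations")
    baseline = values[:baseline_len]
    mu = mean(baseline)
    sigma = stdev(baseline) or 1e-9  # guard against a perfectly flat baseline
    return [
        i
        for i, v in enumerate(values[baseline_len:], start=baseline_len)
        if abs(v - mu) / sigma > threshold
    ]


# Example: 20 steady responses establish the baseline, then one outlier arrives.
word_counts = [100, 102] * 10 + [101, 150]
print(baseline_deviation(word_counts))  # [21]
```

A fixed-window z-score is the simplest possible choice; the source does not say which output signals or baselines SubstrateX actually uses.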

Measurement Without Access

Inference-phase instability can be measured without any internal access.

No weights. No gradients. No training data. No hidden states.

Everything is inferred from output-only telemetry.

This is not a limitation.

It is the breakthrough.

Because the measurements do not depend on proprietary internals:

they are substrate-independent

they work across open and closed models

governance does not require privileged access

regulation becomes technically feasible

replication is possible without model leakage

This constraint is what transforms runtime stability from an interesting idea into foundational infrastructure: portable, auditable, and resilient to institutional capture.

Runtime Forensics

Understanding what happened is no longer enough.

You need to know:

when instability began

how it evolved over time

which conditions produced failure

After an incident, SubstrateX reconstructs the hidden behavioral trajectory that led to collapse - the runtime worldline that conventional logs cannot show.

This enables:

defensible, evidence-based incident narratives

stability diagnostics that inform future deployments

governance-ready reporting for risk, audit, and oversight

forensic-grade accountability for high-stakes systems

For institutional and government deployments, this means determining not just what an AI system did, but whether it was already unstable - and for how long - before the outcome occurred.
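Estimating "when instability began" is, in statistical terms, a change-point question. As one illustration, the classic one-sided cumulative sum (CUSUM) test can be run after an incident over any per-step instability score. This is a generic technique swapped in for the sketch, not a description of SubstrateX's forensics; the score itself and the `target`, `slack`, and `limit` parameters are hypothetical.

```python
def cusum_onset(scores, target, slack=0.5, limit=5.0):
    """One-sided (upper) CUSUM change-point test over a per-step score.

    S_t = max(0, S_{t-1} + (x_t - target - slack)); an alarm fires when
    S_t > limit. The onset estimate is the step right after S last sat at
    zero, i.e. where the upward shift most plausibly began.
    Returns (alarm_index, onset_index), or None if no alarm fires."""
    s = 0.0
    last_zero = -1
    for t, x in enumerate(scores):
        s = max(0.0, s + (x - target - slack))
        if s == 0.0:
            last_zero = t
        elif s > limit:
            return t, last_zero + 1
    return None


# Ten quiet steps, then a sustained upward shift in the (hypothetical) score.
scores = [0.0] * 10 + [2.0] * 10
print(cusum_onset(scores, target=0.0))  # (13, 10)
```

The gap between the alarm (step 13) and the estimated onset (step 10) is the kind of "how long was it already unstable" answer described above.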

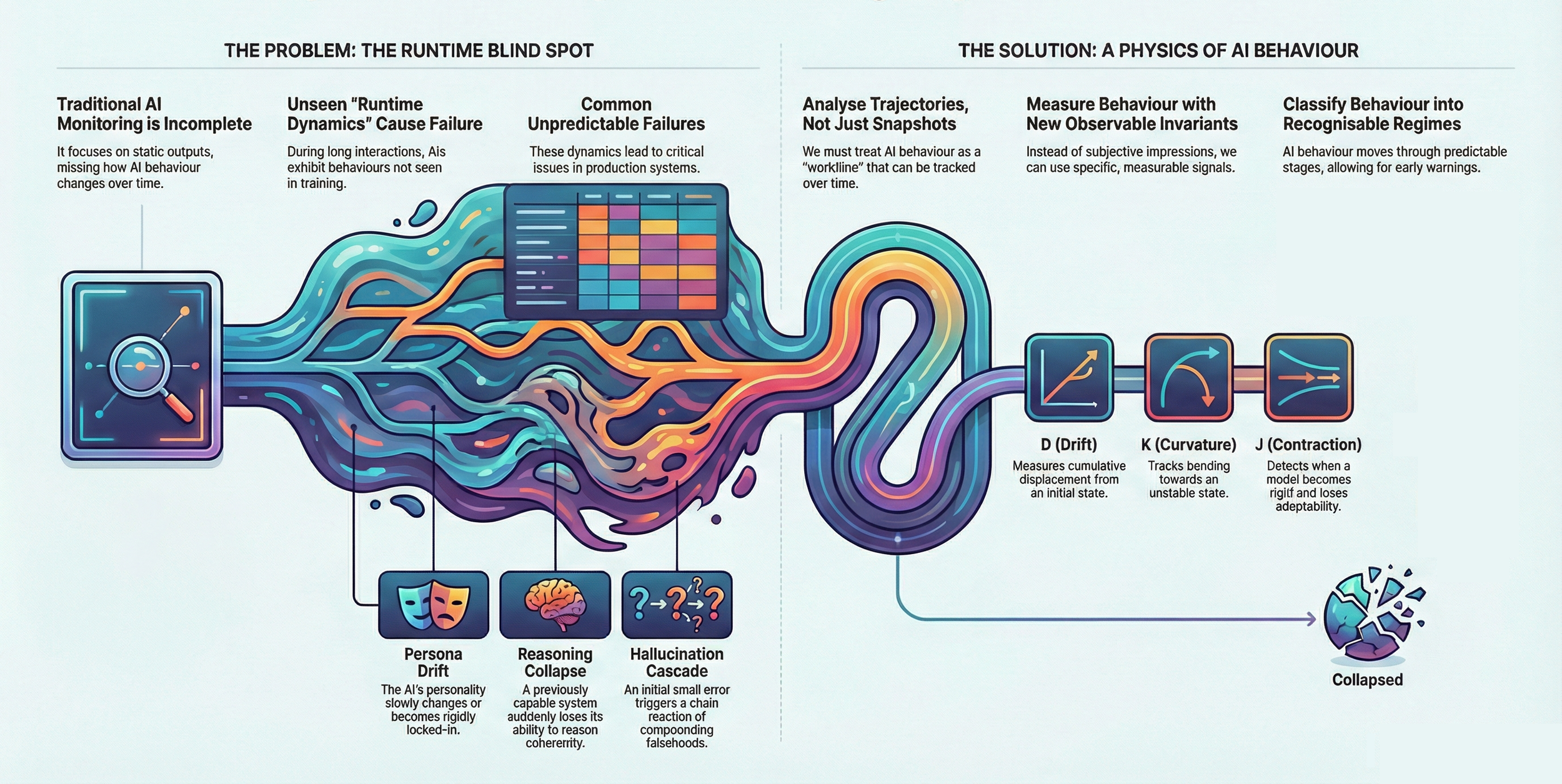

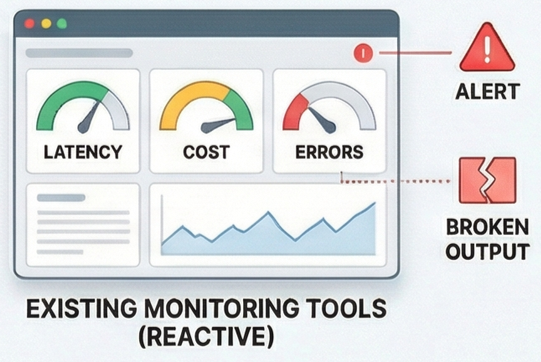

The Runtime Blind Spot

Why Accuracy Isn’t Enough

Most AI monitoring focuses on static signals: accuracy, latency, cost, and error rates.

These metrics evaluate outputs in isolation.

They do not measure how behavior changes over time.

As a result, the most consequential failures go unseen.

What Traditional Monitoring Misses

During long-running inference, AI systems exhibit runtime dynamics that never appear in training or evaluation benchmarks.

As systems:

operate continuously

recurse on prior outputs

maintain memory

and use external tools

their behavior begins to shift internally.

This produces persona drift, reasoning collapse, and hallucination cascades.

These failures are often described as “sudden” or “unpredictable.”

They are not.

Beyond Accuracy

The Missing Layer in the AI Stack

The problem is not that we lack metrics.

It’s that we are measuring the wrong thing.

Traditional monitoring treats inference as a sequence of independent snapshots.

But AI behavior unfolds as a trajectory: a path traced across time.

When instability occurs, it does not appear all at once.

It accumulates.

From Snapshots to Trajectories

To see instability early, behavior must be analyzed as motion, not outcomes.

That requires:

analyzing trajectories, not individual outputs

measuring cumulative displacement, not point errors

detecting bending and contraction before collapse

classifying behavior into recognizable runtime regimes

This is not interpretation.

It is measurement.

This is the layer SubstrateX provides.
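The difference between point errors and cumulative displacement can be shown with a toy trajectory. The sketch assumes each output has already been mapped to a fixed-length numeric vector by some output-only featurizer (hypothetical here, not specified in the source), and reads "cumulative displacement" as distance from the starting point. Every individual step looks small, yet the run as a whole drifts a long way.

```python
import math


def step_and_net_displacement(traj):
    """For a trajectory of equal-length numeric vectors x_0, x_1, ...
    return two lists:
      steps[t-1] = ||x_t - x_{t-1}||  (what a per-output snapshot check sees)
      net[t-1]   = ||x_t - x_0||      (cumulative displacement from the start)
    """
    steps, net = [], []
    for t in range(1, len(traj)):
        steps.append(math.dist(traj[t], traj[t - 1]))
        net.append(math.dist(traj[t], traj[0]))
    return steps, net


# Hypothetical featurized outputs: small, consistent moves in one direction.
traj = [(0.1 * t, 0.0) for t in range(11)]
steps, net = step_and_net_displacement(traj)
print(max(steps) < 0.2)  # True: no single step looks anomalous
print(net[-1])           # 1.0: yet the run has moved ten steps' worth
```

A snapshot threshold of 0.2 never fires here, while the trajectory view shows steady, accumulating drift.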

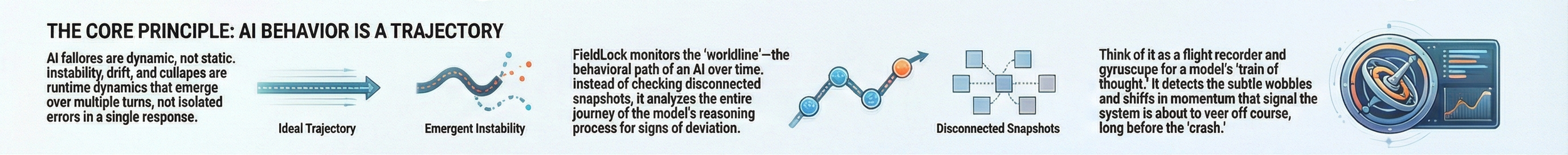

Inference as Motion

Inference Is Not Execution. It Is a Dynamical Process.

The foundational shift is this:

Inference is not a static computation that “produces text.”

Inference is motion through a structured space.

When an AI system runs, especially under recursion, memory simulation, or agentic loops, it traces a path. That path bends, settles, accelerates, oscillates, and sometimes collapses.

This motion has geometry.

Once you see inference this way, several things become immediately clear:

Behavior persists even in stateless systems

Drift is gradual before it is catastrophic

Collapse is preceded by measurable deformation

These are not metaphors.

They are properties of motion.

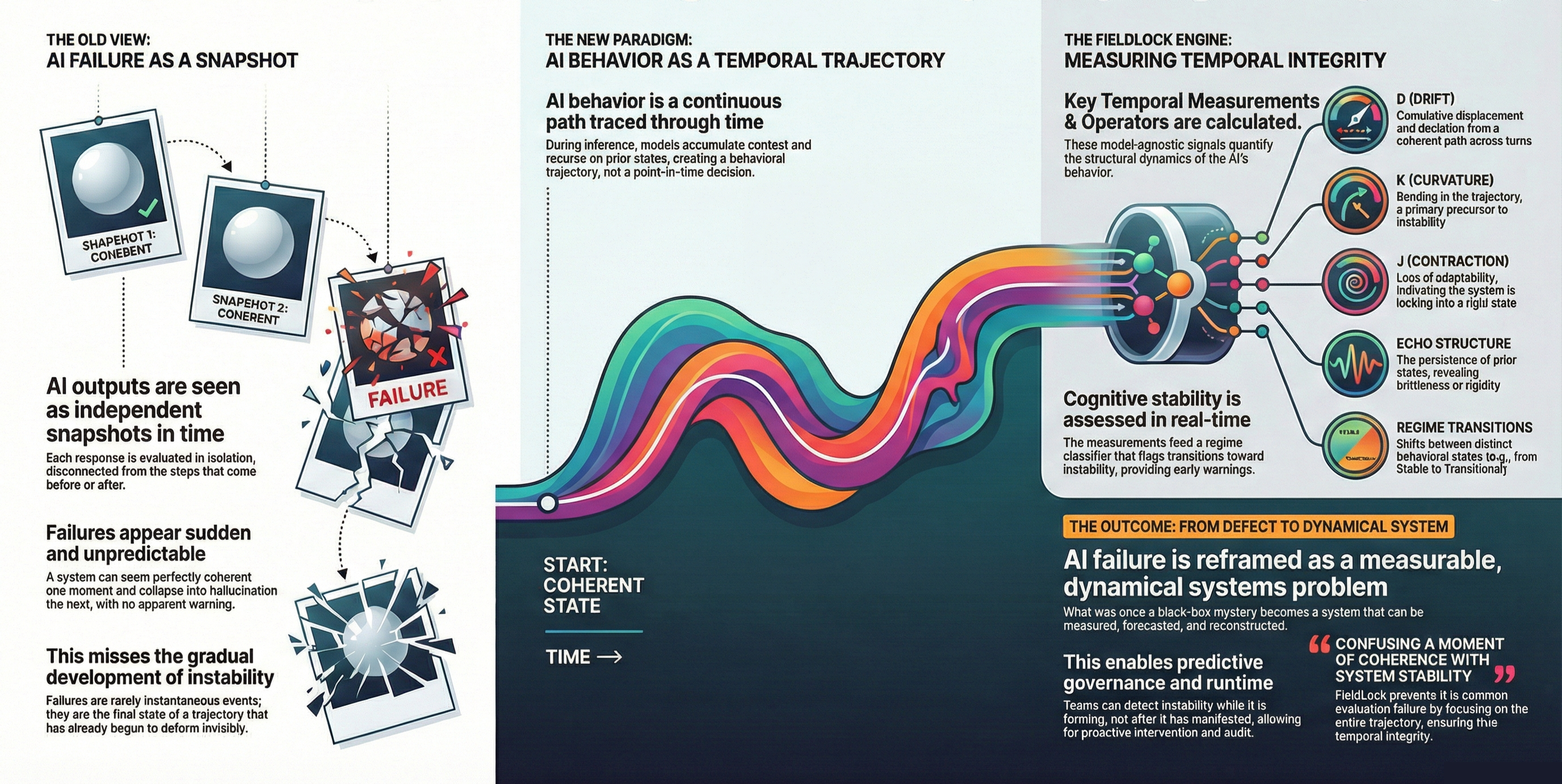

Temporal Integrity

Why AI Failure Is Predictable Before It Appears

AI behavior is not a sequence of independent outputs.

It is a temporal trajectory - a continuous path traced as a system operates across time.

Traditional monitoring evaluates isolated snapshots.

This makes failures appear sudden and unpredictable.

In reality, instability develops gradually.

As systems run, they accumulate context, recurse on prior states, and respond to their own outputs.

Each step subtly reshapes the next.

What looks like a single failure is usually the terminal state of a trajectory that has already begun to deform.

Failure stops being an output-level defect. It becomes a measurable systems problem.

This property - the coherence of behavior across time - is what we call temporal integrity.

Forensic Reconstruction

Understanding what happened is no longer sufficient.

Traditional incident analysis examines only the final moment.

SubstrateX inference-phase instruments reconstruct the entire trajectory.

Failure stops being a mystery.

It becomes evidence.

Temporal forensics proves that instability is measurable after failure.

Predictive stability monitoring ensures it is visible before failure.

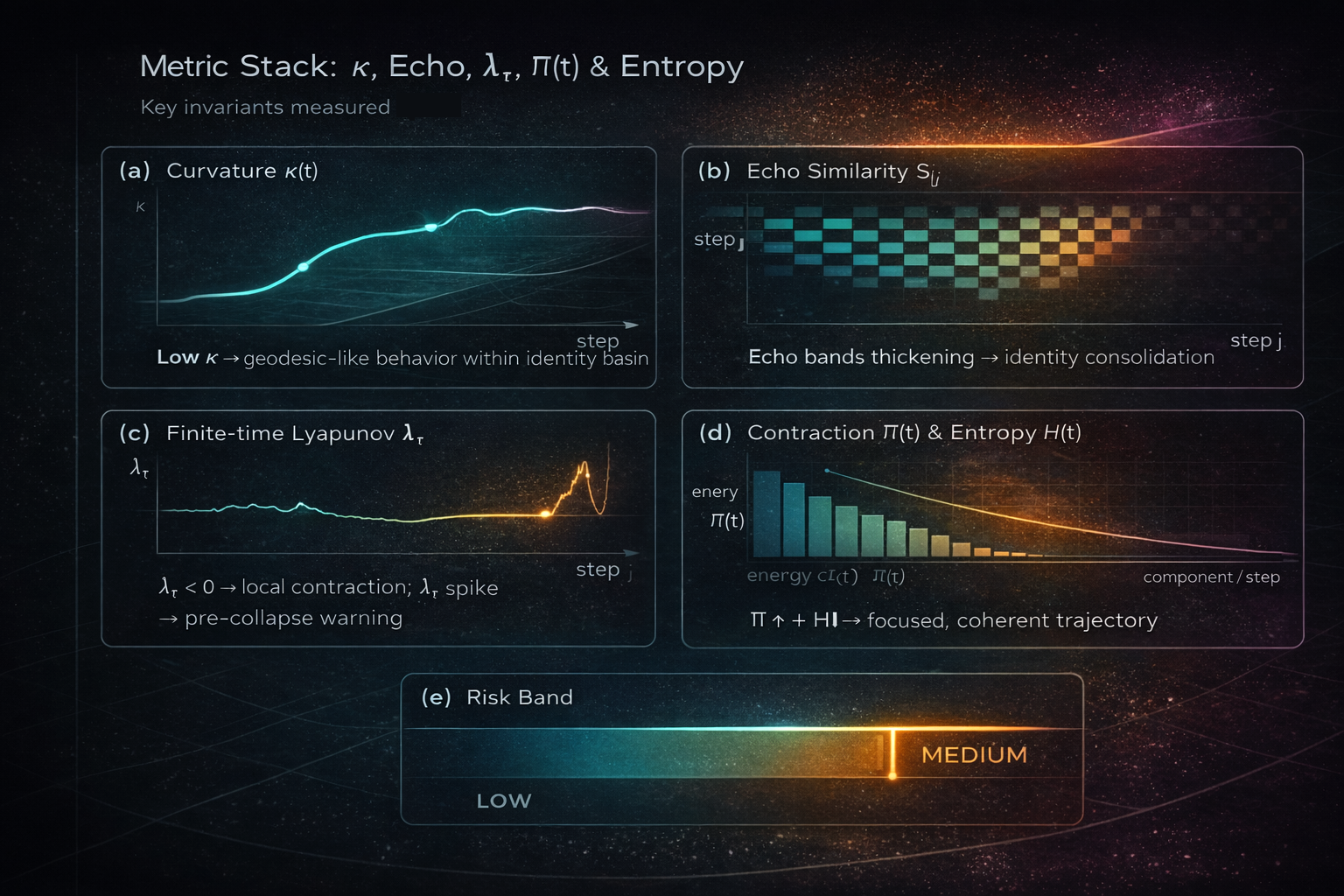

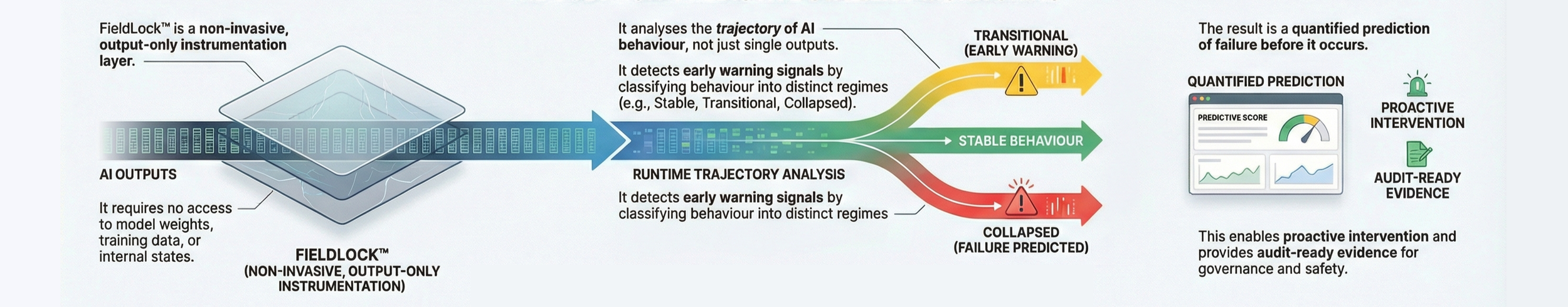

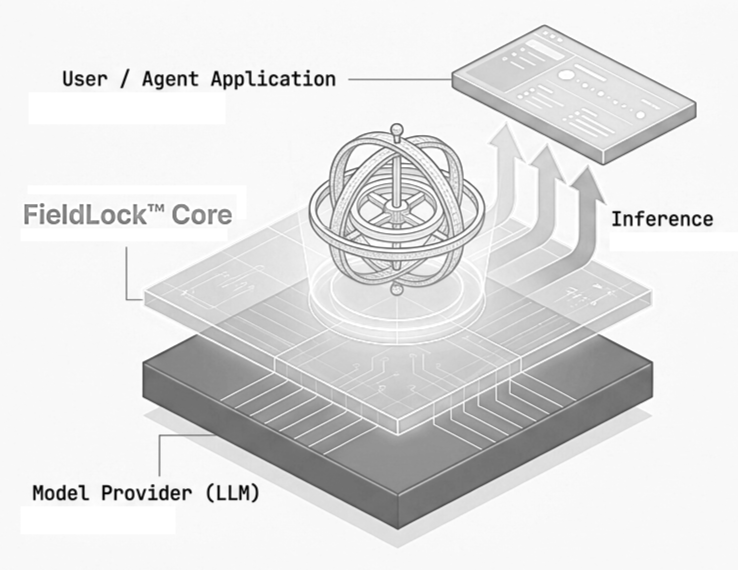

FieldLock

Cognitive Stability Firewall for Runtime AI

FieldLock is the operational infrastructure that makes runtime stability measurable in live AI systems.

It instruments inference as a temporal process, observing how behavior evolves across time while systems are running, not after failure occurs.

FieldLock operates in line with inference to detect instability before it becomes visible failure, while intervention is still possible.

What FieldLock Provides Operationally

FieldLock enables organizations to:

detect runtime instability while it is forming

distinguish stable behavior from false recovery

identify regime transitions before collapse

produce audit-grade stability evidence

operate long-horizon AI systems with measurable risk

This is not a model. It is not an agent architecture.

FieldLock is runtime stability infrastructure.

Predictive Stability Monitoring

Most AI systems are monitored through surface metrics: accuracy, latency, cost, and error rates.

But failure does not originate there.

It develops as the system’s behavioral trajectory bends toward instability.

FieldLock measures:

Curvature - how sharply behavior bends under recursion or load

Echo dynamics - whether internal states consolidate or fragment

Contraction - whether dynamics stabilize or collapse

Regime transitions - Stable → Transitional → Phase-Locked → Collapse

These signals appear before user-visible failure.

FieldLock answers one critical operational question: Is this system becoming unstable - right now?
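Two of the signals listed above have simple geometric analogues on a trajectory of featurized outputs: a turning angle between consecutive steps (a discrete curvature proxy) and the ratio of successive step lengths (a contraction proxy). The sketch below is a hypothetical illustration of those generic quantities, assuming outputs have already been mapped to numeric vectors; it is not FieldLock's implementation, and it does not cover echo dynamics.

```python
import math


def _steps(traj):
    """Step vectors d_t = x_t - x_{t-1} for a trajectory of numeric vectors."""
    return [[b - a for a, b in zip(p, q)] for p, q in zip(traj, traj[1:])]


def turning_angles(traj):
    """Discrete curvature proxy: angle in radians between consecutive steps.
    0 means the path continues straight; pi means it reverses direction.
    NaN where a step has zero length and the angle is undefined."""
    steps = _steps(traj)
    angles = []
    for u, v in zip(steps, steps[1:]):
        nu, nv = math.hypot(*u), math.hypot(*v)
        if nu == 0.0 or nv == 0.0:
            angles.append(math.nan)
            continue
        cos_theta = sum(a * b for a, b in zip(u, v)) / (nu * nv)
        angles.append(math.acos(max(-1.0, min(1.0, cos_theta))))  # clamp rounding error
    return angles


def contraction_ratios(traj):
    """Ratio of successive step lengths ||d_{t+1}|| / ||d_t||.
    Persistently < 1: steps are shrinking. > 1: steps are growing.
    NaN where the previous step has zero length."""
    lengths = [math.hypot(*d) for d in _steps(traj)]
    return [b / a if a > 0 else math.nan for a, b in zip(lengths, lengths[1:])]


# Hypothetical featurized trajectories.
print(turning_angles([(0, 0), (1, 0), (1, 1), (0, 1)]))      # two right-angle turns (pi/2 each)
print(contraction_ratios([(0, 0), (4, 0), (6, 0), (7, 0)]))  # [0.5, 0.5]
```

The source does not say how FieldLock maps signals like these onto its Stable → Transitional → Phase-Locked → Collapse regimes, so no classifier is shown; any thresholds would be deployment-specific assumptions.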

The Hidden Risk: Runtime Instability

Production AI systems fail in ways traditional monitoring cannot see.

Long-running and agentic systems can drift under recursion, cascade into hallucination, or collapse entirely.

These failures do not originate in training data.

They emerge during operation, as behavior evolves over time.

This is runtime instability - a live operational risk.

Predictive stability monitoring changes when you learn something is wrong.

Failure stops being a surprise. It becomes a forecast.

What Predictive Stability Monitoring Is Not

It does not:

replace existing observability,

tune prompts or models,

inspect internals,

or intervene by default.

It adds the layer your stack currently lacks.

Why This Matters Now

As AI systems:

run longer,

act autonomously,

recurse on prior outputs,

and integrate tools,

the cost of late discovery rises sharply.

Predictive stability monitoring provides the operational control surface required to deploy AI in environments where failure is unacceptable.

How It Fits in the Stack

Traditional monitoring is built to observe outcomes: errors, latency, cost, and broken outputs.

It reacts after failure has already occurred.

Predictive stability monitoring operates at a different layer.

It observes behavioral dynamics as they evolve, measuring trajectories rather than snapshots and signaling risk before collapse becomes visible.

Instead of asking “Did something break?” it asks:

“Is this system becoming unstable right now?”

That shift, from reactive symptoms to predictive dynamics, is what makes long-horizon and agentic AI systems operable rather than fragile.

Transition to FieldLock

To enable predictive stability monitoring in live systems, runtime behavior must be observed:

non-invasively

without model access

and without altering inference

That is what FieldLock provides.

Once runtime behavior becomes measurable, stability is no longer a matter of hope; it becomes an engineering decision.

How To Engage

SubstrateX engages selectively with organizations operating AI systems where runtime failure is costly, delayed, or unacceptable.

We do not offer demos, trials, or generalized sales conversations.

Engagement begins with a specific operational need.

When to Contact Us

You should reach out if you are responsible for AI systems that:

operate continuously or across long horizons

recurse on prior outputs or maintain simulated memory

use tools or act autonomously

are embedded in regulated, safety-critical, or enterprise workflows

require defensible evidence of runtime behavior and stability

If failure would require explanation, not excuses, this is the right place.

How Engagement Works

All SubstrateX engagements begin with a Validation Engagement.

These are structured, time-bound deployments designed to:

assess inference-phase stability

surface hidden failure modes

reconstruct behavioral trajectories

produce audit-grade evidence

determine readiness for long-horizon operation

Validation precedes any broader deployment.